Your estimator uses AI to review bid documents. Your PM uses it differently for RFIs. Your field team doesn't use it at all.

That's not AI adoption. That's random experimentation.

Here's how to standardize AI across your company in a way that actually sticks.

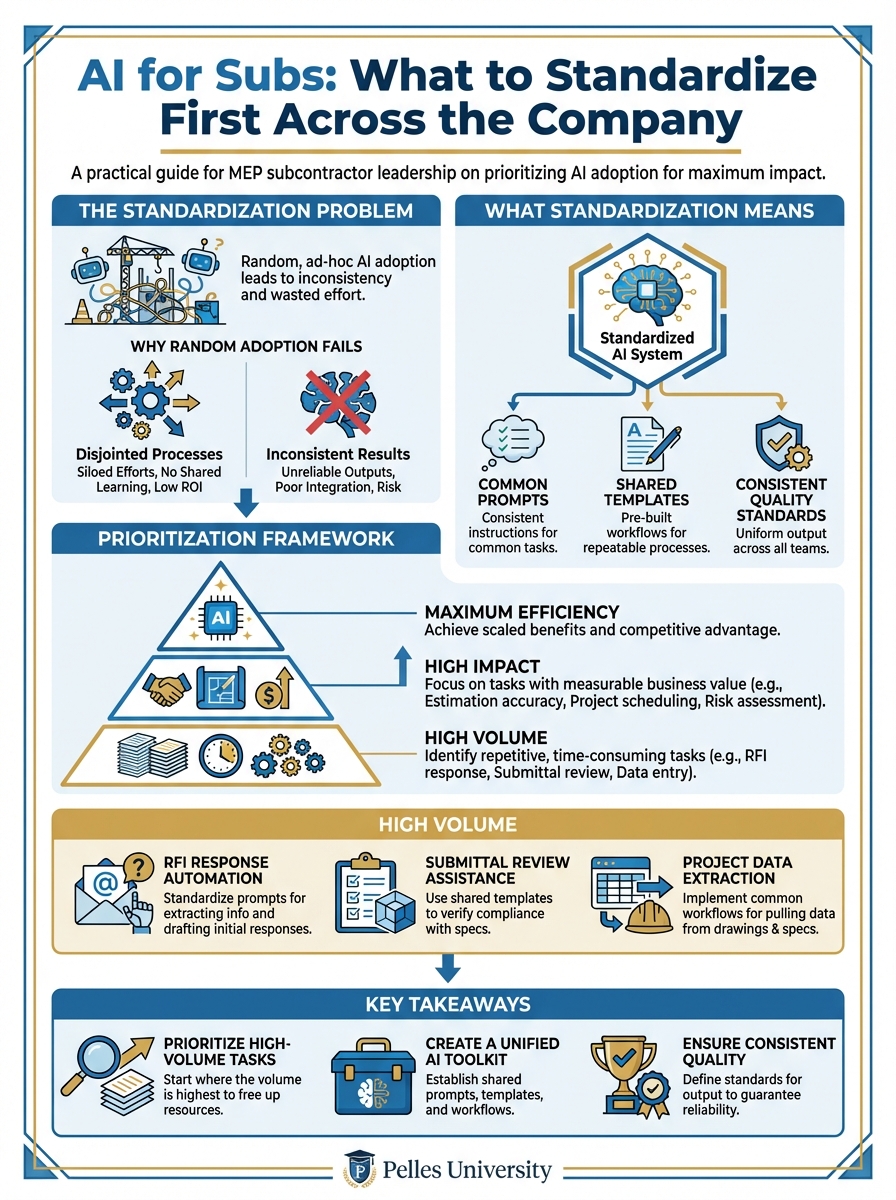

The Standardization Problem

Why Random Adoption Fails

Individual experimentation creates:

Inconsistent quality: One estimator catches scope gaps. Another misses them. Same tool, different results.

Knowledge silos: One person figures out a great workflow. Nobody else knows about it.

Wasted effort: Everyone reinvents the same wheel, slightly differently.

No scalability: What works for your best user doesn't transfer to new hires.

What Standardization Means

Standardization isn't about controlling how people work. It's about:

- Common prompts for common tasks

- Shared templates and workflows

- Consistent quality standards

- Transferable processes

Build once, use across the company.

Prioritization Framework

Not every process is worth standardizing first. Prioritize based on:

High Volume

How often does this happen?

- Bid review: Multiple times per week

- Submittal preparation: Every project

- Daily reports: Every day

High-volume processes give you more return on standardization investment.

High Impact

What's the cost of getting it wrong?

- Scope gaps: Direct margin loss

- Contract red flags: Legal and financial risk

- Change order documentation: Revenue recovery

High-impact processes justify more attention.

High Variability

How inconsistent is current performance?

- If everyone does it the same way: Lower priority for standardization

- If results vary by person: Higher priority

Variability indicates opportunity for improvement.

Low Complexity

How hard is it to standardize?

Start with processes that:

- Have clear inputs and outputs

- Don't require extensive training

- Can be templated

Build momentum with quick wins.
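One way to apply the four factors above is a simple scoring sheet. The sketch below is only an illustration: it assumes each factor is rated 1 to 5 by hand and that all four carry equal weight. The `Process` class, the scale, and the sample ratings are hypothetical, not values from this article.

```python
from dataclasses import dataclass


@dataclass
class Process:
    name: str
    volume: int       # 1 (rare) to 5 (daily)
    impact: int       # 1 (minor) to 5 (margin or legal risk)
    variability: int  # 1 (everyone does it the same way) to 5 (varies by person)
    complexity: int   # 1 (easy to template) to 5 (hard to standardize)

    def priority(self) -> int:
        # Volume, impact, and variability raise priority.
        # Complexity lowers it, so it is inverted on the same 1-5 scale.
        return self.volume + self.impact + self.variability + (6 - self.complexity)


def rank(processes: list[Process]) -> list[Process]:
    """Return processes sorted from highest to lowest priority."""
    return sorted(processes, key=lambda p: p.priority(), reverse=True)


# Illustrative ratings only (assumed); rate your own processes.
candidates = [
    Process("Change order narrative", volume=2, impact=5, variability=4, complexity=4),
    Process("Bid package triage", volume=4, impact=4, variability=4, complexity=1),
    Process("Daily report generation", volume=5, impact=2, variability=3, complexity=3),
]

for p in rank(candidates):
    print(f"{p.priority():>2}  {p.name}")
```

Equal weighting is the simplest choice. If margin protection matters most to your company, you might multiply `impact` by a larger weight before summing.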

The Prioritized List

Based on these factors, here's the recommended order:

Tier 1: Start Here (Weeks 1-4)

1. Bid Package Triage

- Volume: Every bid

- Impact: Time savings + bid/no-bid quality

- Complexity: Low (document input → summary output)

Standardize: A prompt template that extracts key info from any bid package.

2. Scope Inclusions/Exclusions Check

- Volume: Every bid

- Impact: Direct margin protection

- Complexity: Low-medium (scope document → checklist)

Standardize: A checklist prompt that compares scope to specifications.

3. Specification Lookups

- Volume: Daily across multiple roles

- Impact: Time savings + accuracy

- Complexity: Low (question → cited answer)

Standardize: A question format that gets consistent, cited answers.

Tier 2: Build On Success (Weeks 5-8)

4. Contract Red Flag Review

- Volume: Every project

- Impact: Risk management

- Complexity: Medium (requires contract knowledge)

Standardize: A review prompt with company-specific flag criteria.

5. Submittal Requirements Extraction

- Volume: Every project

- Impact: Schedule protection

- Complexity: Medium (multiple spec sections)

Standardize: An extraction template for submittal registers.

6. RFI Drafting

- Volume: Multiple per project

- Impact: Response quality and timing

- Complexity: Medium (requires context)

Standardize: An RFI template with required elements.

Tier 3: Expand Systematically (Weeks 9-12)

7. Change Order Narrative

- Volume: Per change event

- Impact: Revenue recovery

- Complexity: Higher (requires entitlement understanding)

Standardize: A narrative template with documentation requirements.

8. Meeting Notes Extraction

- Volume: Weekly per project

- Impact: Action item tracking

- Complexity: Medium (transcript → structured output)

Standardize: An extraction format for consistent meeting documentation.

9. Daily Report Generation

- Volume: Daily

- Impact: Documentation quality

- Complexity: Medium (multiple inputs)

Standardize: A field report format with required elements.

Tier 4: Advanced Applications

10. Addendum Comparison

- Impact: Revision management

- Complexity: Higher (multiple documents)

11. QA/QC Checklist Generation

- Impact: Quality control

- Complexity: Higher (spec-specific)

12. Knowledge Base Building

- Impact: Institutional knowledge

- Complexity: Higher (organizational)

How to Standardize

Step 1: Document Current State

Before standardizing, understand how the process works today:

- Who does this task?

- What inputs do they use?

- What outputs do they produce?

- How long does it take?

- What are common errors?

Interview your best performers. Understand what makes them effective.

Step 2: Build the Template

Create a standard template that:

- Specifies the inputs required

- Provides the prompt structure

- Defines the expected output format

- Includes quality checks

Example: Bid Package Triage Template

INPUTS NEEDED:

- ITB document (PDF)

- Project specifications (PDF)

- Drawing list (if available)

PROMPT:

Review this bid package and extract:

1. Project name and location

2. Bid due date and time

3. Scope summary (what's being bid)

4. Key deadlines (site visit, RFI deadline, etc.)

5. Bonding requirements

6. Insurance requirements

7. Any unusual requirements or red flags

Format as a one-page summary I can share with the bid team.

[Attach documents]

OUTPUT CHECK:

- All dates verified against source documents

- Key requirements captured

- Red flags highlighted
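Part of the OUTPUT CHECK can be automated before the human review. The sketch below assumes the AI summary comes back as plain text with labeled sections. The field labels, the `missing_fields` helper, and the sample draft are hypothetical. It only confirms that each section is present; someone still has to verify dates and facts against the source documents.

```python
# Labels the summary is expected to contain (assumed wording).
REQUIRED_FIELDS = [
    "Project name and location",
    "Bid due date and time",
    "Scope summary",
    "Key deadlines",
    "Bonding requirements",
    "Insurance requirements",
    "Red flags",
]


def missing_fields(summary: str) -> list[str]:
    """Return required field labels that do not appear in the summary text."""
    lowered = summary.lower()
    return [label for label in REQUIRED_FIELDS if label.lower() not in lowered]


# Made-up draft for illustration; two sections are deliberately left out.
draft = """Project name and location: Example School Addition, Springfield
Bid due date and time: (verify against ITB)
Scope summary: Interior framing and drywall
Key deadlines: Site visit, RFI deadline
Bonding requirements: Bid bond required
"""

gaps = missing_fields(draft)
if gaps:
    print("Send back for another pass; missing:", ", ".join(gaps))
```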

Step 3: Pilot With One Team

Don't roll out company-wide immediately:

- Select one team or person

- Have them use the template for 2 weeks

- Collect feedback on what works and what doesn't

- Refine the template

- Document lessons learned

Pilots surface problems before they become company-wide issues.

Step 4: Train and Deploy

Once refined:

Training:

- Show the template and expected use

- Walk through example outputs

- Clarify quality standards

- Answer questions

Deployment:

- Make templates easily accessible

- Set expectations for use

- Provide support channel for questions

Reinforcement:

- Review outputs periodically

- Celebrate good examples

- Address issues quickly

Step 5: Measure and Improve

Track:

- Adoption rate (who's using it?)

- Quality of outputs (are they good?)

- Time savings (is it faster?)

- Error reduction (are we catching more?)

Use data to improve templates over time.
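The first, third, and fourth of these metrics can be computed from a simple task log. Output quality still needs a human rating and is not covered here. The sketch below assumes you record who did each task, whether they used the template, how long it took, and how many issues they caught. The `TaskRecord` fields and the sample log are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskRecord:
    user: str
    used_template: bool
    minutes: float       # time to complete the task
    issues_caught: int   # e.g. scope gaps or red flags found


def adoption_rate(records: list[TaskRecord]) -> float:
    """Share of users who used the template at least once."""
    users = {r.user for r in records}
    adopters = {r.user for r in records if r.used_template}
    return len(adopters) / len(users) if users else 0.0


def compare(records: list[TaskRecord]) -> dict[str, dict[str, float]]:
    """Average minutes and issues caught, with and without the template."""
    result = {}
    for label, flag in (("with_template", True), ("without_template", False)):
        group = [r for r in records if r.used_template == flag]
        if group:
            result[label] = {
                "avg_minutes": mean(r.minutes for r in group),
                "avg_issues_caught": mean(r.issues_caught for r in group),
            }
    return result


# Made-up log entries for illustration only.
log = [
    TaskRecord("estimator_a", True, 20, 3),
    TaskRecord("estimator_a", True, 30, 2),
    TaskRecord("estimator_b", False, 60, 1),
    TaskRecord("pm_a", False, 50, 1),
]

print(f"Adoption: {adoption_rate(log):.0%}")
print(compare(log))
```

A comparison like this is only as good as the log behind it. With a handful of records, treat the numbers as a prompt for conversation rather than proof.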

Building the Template Library

Organization

Create a central library:

AI Templates/

├── Bidding/

│ ├── bid-package-triage.md

│ ├── scope-check.md

│ └── clarifications-request.md

├── Contracts/

│ ├── red-flag-review.md

│ └── flow-down-check.md

├── PM/

│ ├── submittal-register.md

│ ├── rfi-drafting.md

│ └── change-order-narrative.md

└── Field/

  ├── spec-lookup.md

  ├── daily-report.md

  └── qc-checklist.md
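If you want to set up this folder layout in one step, a short script can create it. The sketch below uses the folder and file names from the tree above. The `create_library` function is hypothetical, and the demo writes to a temporary directory as a stand-in for your shared drive.

```python
from pathlib import Path
import tempfile

# Folder -> template files, copied from the library layout above.
LIBRARY = {
    "Bidding": ["bid-package-triage.md", "scope-check.md", "clarifications-request.md"],
    "Contracts": ["red-flag-review.md", "flow-down-check.md"],
    "PM": ["submittal-register.md", "rfi-drafting.md", "change-order-narrative.md"],
    "Field": ["spec-lookup.md", "daily-report.md", "qc-checklist.md"],
}


def create_library(root: Path) -> list[Path]:
    """Create missing folders and empty template files; never overwrite existing ones."""
    created = []
    for folder, files in LIBRARY.items():
        directory = root / folder
        directory.mkdir(parents=True, exist_ok=True)
        for name in files:
            path = directory / name
            if not path.exists():
                path.touch()
                created.append(path)
    return created


# Demo in a throwaway directory; point the root at your shared location instead.
with tempfile.TemporaryDirectory() as tmp:
    created = create_library(Path(tmp) / "AI Templates")
    print(f"Created {len(created)} template files")
```

Because existing files are skipped, the script can be run again after you add a new template name to `LIBRARY` without touching templates people have already filled in.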

Template Format

Each template should include:

Header:

- Template name

- Purpose

- Last updated

- Owner

Inputs:

- What documents are needed

- What information is required

Prompt:

- The actual prompt to use

- Variables to fill in

Output:

- Expected format

- Quality checks

Examples:

- Good output example

- Common mistakes
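The same structure can be written down as a small data type, which makes it harder to leave a section out. This is a sketch under assumptions: the `PromptTemplate` class and the sample spec lookup wording are hypothetical, and the Examples section is omitted for brevity. Variables are marked with `$name` using Python's standard `string.Template`.

```python
from dataclasses import dataclass, field
from string import Template


@dataclass
class PromptTemplate:
    # Header
    name: str
    purpose: str
    last_updated: str
    owner: str
    # Inputs
    inputs: list[str]
    # Prompt; may contain $variables to fill in
    prompt: str
    # Output
    quality_checks: list[str] = field(default_factory=list)

    def render(self, **variables: str) -> str:
        # substitute() raises KeyError if a variable is left unfilled,
        # which prevents sending a half-completed prompt.
        return Template(self.prompt).substitute(**variables)


# Placeholder values; fill in your own header details and wording.
spec_lookup = PromptTemplate(
    name="Spec lookup",
    purpose="Get a cited answer to a specification question",
    last_updated="YYYY-MM-DD",
    owner="(template owner)",
    inputs=["Project specifications (PDF)"],
    prompt=(
        "Answer this question using only the attached specifications: $question "
        "Cite the section number for each statement."
    ),
    quality_checks=["Every citation checked against the source section"],
)

print(spec_lookup.render(question="What is the required concrete strength?"))
```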

Version Control

Templates evolve. Track changes:

- Date of update

- What changed

- Why it changed

- Who approved

This maintains quality as templates improve.
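A change log can be as simple as one line per update kept at the bottom of each template file. The sketch below records the four items listed above. The `Change` class, the line format, and the sample entries are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Change:
    updated: date      # date of update
    what: str          # what changed
    why: str           # why it changed
    approved_by: str   # who approved


def format_changelog(changes: list[Change]) -> str:
    """Render changes newest first, one plain-text line per change."""
    ordered = sorted(changes, key=lambda c: c.updated, reverse=True)
    return "\n".join(
        f"{c.updated.isoformat()} | {c.what} | {c.why} | approved: {c.approved_by}"
        for c in ordered
    )


# Made-up entries for illustration only.
history = [
    Change(date(2025, 1, 6), "Initial version", "Pilot start", "Chief estimator"),
    Change(date(2025, 2, 3), "Added insurance item", "Pilot feedback", "Chief estimator"),
]

print(format_changelog(history))
```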

Overcoming Adoption Barriers

"My Way Works Fine"

Response: Show the data. Compare outputs. If someone's personal method is better, incorporate it into the standard.

"This Takes Too Long"

Response: Measure the actual time, and count the time spent using the template. It's usually faster once people have learned it.

"I Don't Trust AI"

Response: Build verification into the process. AI draft + human review. Trust but verify.

"We're Too Busy"

Response: Start with the highest-volume tasks. Time savings compound there, so the investment pays back soonest.

Leadership's Role

Set Expectations

Make it clear:

- Standardized workflows are expected

- Quality standards apply

- This is how we work now

Provide Resources

Invest in:

- Template development

- Training time

- Support resources

- Feedback channels

Model Behavior

If leadership uses standardized templates, teams follow. If leadership ignores them, so will everyone else.

Measure Progress

Track and share:

- Adoption rates

- Quality improvements

- Time savings

- Error reductions

What gets measured gets managed.

What's Next

Standardizing processes is step one. The next step is measuring the return on that standardization, so you can justify continued investment and identify the next opportunities.

TL;DR

- Random AI experimentation creates inconsistent results and knowledge silos

- Prioritize standardization by volume, impact, variability, and complexity

- Start with bid package triage, scope checking, and spec lookups

- Build a template library with clear inputs, prompts, outputs, and quality checks

- Pilot with one team, refine, then deploy company-wide with training and measurement