You bought the AI tool. You sent the training link. Three months later, two people use it regularly. Everyone else went back to their old process.

This is the typical AI adoption story. It's not a technology failure; it's a change management failure.

Here's how to get AI adoption that actually sticks.

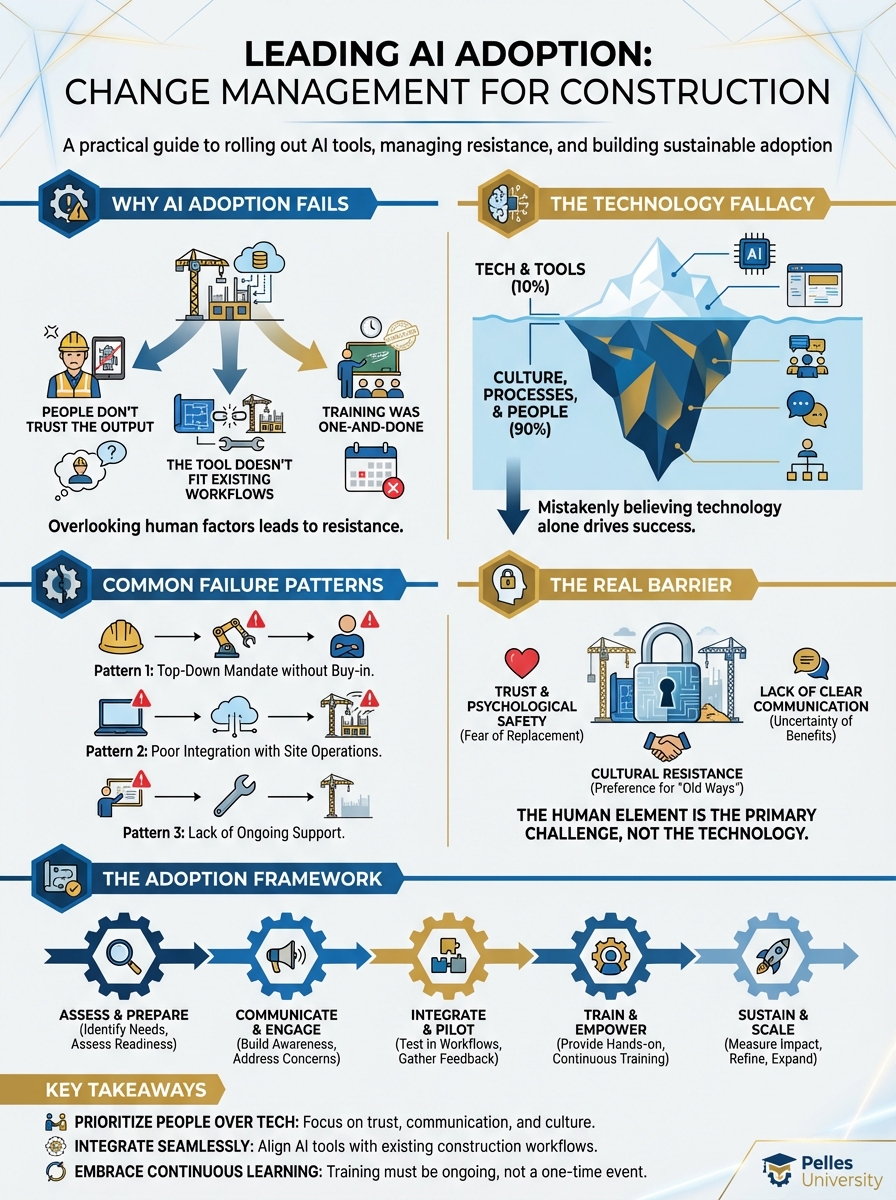

Why AI Adoption Fails

The Technology Fallacy

The assumption: If we buy good technology, people will use it.

The reality: As a rule of thumb, technology adoption is roughly 20% tool quality and 80% change management.

Great AI tools fail when:

- People don't trust the output

- The tool doesn't fit existing workflows

- Training was one-and-done

- No one reinforces the new behavior

- Early problems aren't solved quickly

Common Failure Patterns

The Big Bang: Rolling out to everyone at once, overwhelming support capacity and creating frustrated users.

The Email Announcement: Sending a link with "start using this" and assuming adoption happens.

The Mandate: Requiring use without building capability or addressing concerns.

The Pilot That Stays a Pilot: Never expanding beyond initial users despite success.

The Abandoned Rollout: Launching with enthusiasm, then moving on without reinforcement.

The Real Barrier

The real barrier isn't technology. It's:

Fear: "Will this replace me?"

Comfort: "My current process works fine."

Trust: "How do I know it's accurate?"

Time: "I don't have time to learn something new."

Experience: "We tried something like this before and it failed."

These are human concerns. They need human solutions.

The Adoption Framework

Phase 1: Foundation

Understand the Current State

Before introducing change:

- How do people work today?

- What pain points exist?

- What's worked before?

- What's failed before?

- Who influences others?

Don't assume you know. Ask.

Define the Target State

Be specific about what success looks like:

- Who will use the tool?

- For what specific tasks?

- What outcomes matter?

- How will you measure?

Vague goals produce vague results.

Identify Champions

Champions are:

- Respected by peers

- Open to new approaches

- Willing to help others

- Not necessarily the most tech-savvy

Find 2-3 people who will lead by example.

Phase 2: Pilot

Select the Right Use Case

Good pilot use cases:

- Clear pain point that AI addresses

- Measurable improvement possible

- Limited scope (not everything at once)

- Visible results to build momentum

Bad pilot use cases:

- Complex workflows requiring many changes

- Results that take months to see

- Low-value tasks no one cares about

Start Small, Prove Value

Pilot structure:

- 3-5 users maximum

- Single use case

- 4-6 weeks duration

- Weekly check-ins

- Clear success metrics

Support Intensively

During pilot:

- Be available for questions

- Solve problems same-day

- Collect feedback continuously

- Adjust approach as needed

Early users need more support, not less.

Phase 3: Early Wins

Capture and Share Success

When the pilot works:

- Document specific outcomes

- Quantify time saved or quality improved

- Get testimonials from users

- Share with broader organization

Real stories from peers are more convincing than vendor claims.

Address Failures Honestly

When things don't work:

- Acknowledge the issue

- Explain what you learned

- Describe what will change

- Try again with adjustments

Pretending failures didn't happen destroys trust.

Phase 4: Scaled Rollout

Expand Deliberately

Don't go from pilot to everyone. Expand in waves:

- Wave 1: Pilot users (5-10 people)

- Wave 2: Willing adopters (15-25 people)

- Wave 3: General population (everyone else)

Each wave should stabilize before the next.
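One way to make "stabilize" concrete is to agree on a simple gate before starting the next wave. The sketch below is illustrative only: the `WaveStatus` fields, the `is_stable` name, and both thresholds (70% active users, at most 2 open issues) are assumptions to be replaced with whatever your team decides counts as stable.

```python
from dataclasses import dataclass


@dataclass
class WaveStatus:
    """Snapshot of one rollout wave (hypothetical fields)."""
    name: str
    users: int          # people onboarded in this wave
    active_users: int   # of those, how many use the tool regularly
    open_issues: int    # unresolved adoption or support issues


def is_stable(wave: WaveStatus,
              min_active_rate: float = 0.7,
              max_open_issues: int = 2) -> bool:
    """Return True when the wave meets both assumed stability thresholds."""
    if wave.users == 0:
        return False
    active_rate = wave.active_users / wave.users
    return active_rate >= min_active_rate and wave.open_issues <= max_open_issues


if __name__ == "__main__":
    wave_1 = WaveStatus("Wave 1", users=10, active_users=8, open_issues=1)
    if is_stable(wave_1):
        print("Wave 1 is stable; start planning Wave 2.")
    else:
        print("Hold the next wave and resolve open issues first.")
```

The point of the gate is less the exact numbers than having them written down before the rollout starts, so the decision to expand is not made on enthusiasm alone.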

Train Appropriately

Training should be:

- Hands-on, not lecture

- Role-specific, not generic

- Short sessions, not marathons

- Followed by practice time

- Supported by reference materials

One training session isn't enough.

Create Support Systems

As you scale:

- Internal help resources

- Quick-reference guides

- Regular office hours

- Peer support network

- Escalation path for issues

People need help after training, not just during.

Phase 5: Reinforcement

Make It Part of the Process

Sustainable adoption means:

- AI use becomes the expected approach

- Workflows assume AI assistance

- Quality checks include AI outputs

- Success metrics track AI impact

When AI use is optional, it tends to go unused.

Celebrate Progress

Recognition reinforces behavior:

- Share success stories

- Recognize heavy adopters

- Quantify organizational impact

- Connect to business outcomes

People repeat what gets recognized.

Continuously Improve

Adoption is never finished:

- Collect ongoing feedback

- Address emerging issues

- Add new use cases

- Update training as tools evolve

Treat adoption as ongoing, not a one-time project.

Handling Resistance

Understanding Resistance

Resistance usually has roots:

Fear of replacement: "If AI can do my job, why do they need me?"

Fear of failure: "What if I can't learn this? Will I look incompetent?"

Valid concerns: "Last time we tried new technology, it was a disaster."

Workflow protection: "I've spent years developing my process. Why change?"

Don't dismiss resistance. Understand it.

Addressing Common Concerns

"AI will replace me"

Response: "AI handles the tedious parts so you can focus on the judgment and decisions that require your experience. The people using AI effectively become more valuable, not less."

"I don't trust AI output"

Response: "You're right to verify. Let's look at how to check AI outputs efficiently. Your expertise in evaluating results is what makes AI useful."

"I don't have time to learn"

Response: "Learning takes time upfront but saves time after. Let's find a specific task where you'll see the time savings quickly."

"This is just a fad"

Response: "You might be right. Let's try it on something specific and see if it's actually useful for your work. If not, we'll know."

Converting Skeptics

Some skeptics become the best advocates:

Engage directly: Ask for their specific concerns

Address honestly: Don't oversell or minimize

Invite participation: "Help us figure out if this works"

Show evidence: Real results from their peers

Be patient: Trust builds slowly

Forced compliance creates resentment. Genuine conversion creates champions.

When to Move On

Some people won't adopt:

Recognize: Not everyone will embrace every change

Minimize: Ensure non-adopters don't block others

Document: Keep records of training offered

Continue: Focus energy on willing participants

Don't let holdouts consume all your change management energy.

Measuring Adoption Success

Leading Indicators

Early signs adoption is working:

- Users logging in regularly

- Questions being asked

- Use cases expanding

- Peer recommendations happening

- Workflow integration occurring

Lagging Indicators

Outcomes that prove value:

- Time saved on specific tasks

- Quality improvements measured

- Errors or issues caught

- User satisfaction scores

- Business impact quantified

What to Track

Usage metrics:

- Active users / Total trained

- Frequency of use

- Features utilized

- Use cases applied

Outcome metrics:

- Time saved per task

- Quality scores

- Issues identified

- Cost impact

Adoption metrics:

- Training completion

- Time to first use

- Time to regular use

- Expansion to new use cases
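A few of these metrics can be computed from a simple per-user usage log. The following is a minimal sketch under stated assumptions: the `UserRecord` fields, the `adoption_summary` name, and the definition of "active" as at least four uses in the last 30 days are all hypothetical; adapt them to whatever your AI tool actually exports.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional


@dataclass
class UserRecord:
    """One trained user (hypothetical fields)."""
    name: str
    trained_on: date
    first_use: Optional[date]   # None if the user has never used the tool
    uses_last_30_days: int


def adoption_summary(users, active_threshold=4):
    """Compute active users / total trained and median time to first use."""
    trained = len(users)
    # "Active" is an assumed definition: at least `active_threshold` uses in 30 days.
    active = sum(1 for u in users if u.uses_last_30_days >= active_threshold)
    days_to_first_use = [
        (u.first_use - u.trained_on).days
        for u in users
        if u.first_use is not None
    ]
    return {
        "trained": trained,
        "active": active,
        "active_rate": active / trained if trained else 0.0,
        "median_days_to_first_use": (
            median(days_to_first_use) if days_to_first_use else None
        ),
    }


if __name__ == "__main__":
    users = [
        UserRecord("estimator_a", date(2024, 1, 1), date(2024, 1, 3), 10),
        UserRecord("estimator_b", date(2024, 1, 1), None, 0),
    ]
    print(adoption_summary(users))
```

Users who were trained but never used the tool are excluded from the time-to-first-use figure, so it is worth reporting that number alongside the active rate rather than on its own.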

Reporting Progress

Share adoption progress regularly:

Weekly during rollout:

- New users onboarded

- Issues encountered and resolved

- Early wins achieved

Monthly after rollout:

- Adoption rates

- Outcome metrics

- User feedback themes

- Next expansion plans

Visibility maintains momentum.

Role of Leadership

Executive Sponsorship

Adoption needs visible leadership support:

Active participation:

- Executives using the tools themselves

- Speaking about AI in company communications

- Asking about AI use in meetings

- Allocating resources for adoption

Not just endorsement: "The CEO said use it" isn't enough. Visible, ongoing support matters.

Middle Management

Middle managers make or break adoption:

Enable or block:

- Do they give people time to learn?

- Do they model the new behavior?

- Do they solve adoption problems?

- Do they measure and reinforce?

The squeeze: Middle managers often feel pressure from both sides. Support them specifically.

Peer Influence

Colleagues influence each other:

Create peer networks:

- User communities

- Office hours with peer experts

- Shared best practices

- Success story sharing

Peer recommendations carry weight.

Sustaining Long-Term Adoption

Building Habits

Adoption becomes habit through:

Repetition: Regular use builds automaticity

Rewards: Positive outcomes reinforce behavior

Triggers: Workflow cues prompt tool use

Simplicity: Easy actions are repeated

Avoiding Regression

Prevent backsliding:

Monitor usage: Track trends, not just snapshots

Address drops quickly: Investigate declining use

Refresh training: Periodic updates for existing users

Evolve use cases: Keep expanding value
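To illustrate "trends, not just snapshots", the sketch below compares the average of the most recent weeks with the weeks before them. The `usage_trend` name, the four-week window, and the input format (a list of weekly active-user counts, oldest first) are assumptions for illustration, not a feature of any specific tool.

```python
def usage_trend(weekly_active, window=4):
    """Return the relative change between the last `window` weeks and the
    `window` weeks before them, or None if there is not enough data."""
    if len(weekly_active) < 2 * window:
        return None
    recent = weekly_active[-window:]
    previous = weekly_active[-2 * window:-window]
    previous_mean = sum(previous) / window
    if previous_mean == 0:
        return None  # no baseline to compare against
    recent_mean = sum(recent) / window
    return (recent_mean - previous_mean) / previous_mean


if __name__ == "__main__":
    # Weekly active users, oldest first (made-up numbers for the example).
    counts = [20, 20, 20, 20, 15, 15, 15, 15]
    change = usage_trend(counts)
    if change is not None and change < -0.1:
        print(f"Usage is down {abs(change):.0%}; investigate before it becomes a habit.")
```

A single week's count can look healthy even while the trend is falling, which is why the comparison uses averaged windows rather than the latest value.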

Expanding Over Time

Once core adoption is stable:

Add use cases: What else can the tool help with?

Deepen expertise: Advanced training for power users

Extend reach: New teams or departments

Integrate further: Connect to other workflows

Adoption is a journey, not a destination.

Using AI to Drive AI Adoption

Creating Training Materials

Example prompt:

Create a 10-minute training outline for teaching estimators to use AI for specification review.

Include:

- Opening hook (why this matters)

- Demonstration of specific workflow

- Hands-on practice exercise

- Common mistakes to avoid

- Reference resources

Target audience: Experienced estimators new to AI tools.

Addressing Resistance

Example prompt:

Help me prepare responses for these common objections to AI adoption in our construction company:

1. "I've been doing this for 20 years without AI"

2. "What if the AI is wrong?"

3. "This will slow me down"

4. "We tried software before and it didn't work"

Keep responses respectful and evidence-based.

Measuring Progress

Example prompt:

Create a monthly AI adoption report template that includes:

- Usage metrics (active users, frequency)

- Outcome metrics (time saved, quality)

- User feedback summary

- Issues and resolutions

- Next month priorities

Format for presentation to leadership.

What's Next

Successful AI adoption creates the foundation for continuous improvement. As teams become comfortable with AI assistance, the next step is optimizing how AI fits into your broader operational workflows and measuring the compounding returns from AI-enhanced processes.

TL;DR

- AI adoption usually fails because of change management gaps, not technology problems

- Start with willing champions and a single valuable use case

- Pilot before scaling: prove value, then expand in waves

- Address resistance by listening and responding to genuine concerns

- Measure leading indicators (usage) and lagging indicators (outcomes)

- Adoption requires sustained support, not one-time training